Build a Planar Shapes Classifier with Two Layers of TLU Neurons (Lect. 1)

![Lect. 1, Example 2.1: two-layer TLU network and layer-1 weights](https://media.cheggcdn.com/media/d8f/d8f59670-a620-4d44-9c0a-5c4166de6fda/phpkgZsCJ.png)

![Lect. 1, Example 2.1: first-layer equations and the shaded decision region](https://media.cheggcdn.com/media/bef/bef50234-632e-4af8-9eae-c705ff8330e0/phpvwoD3I.png)

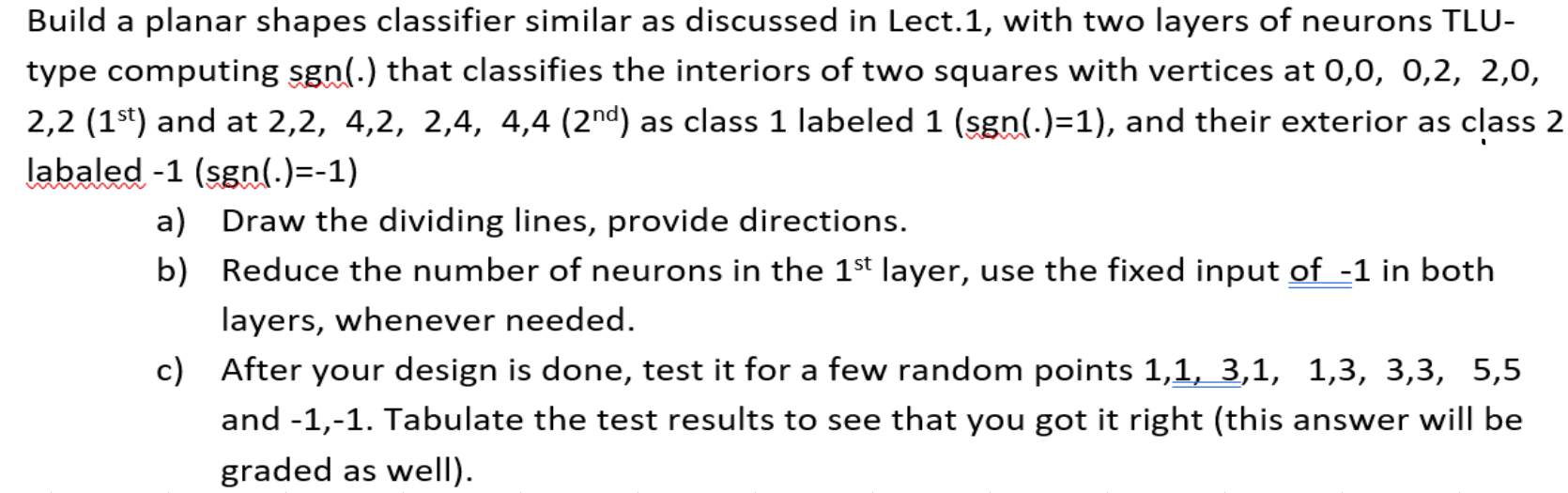

Build a planar shapes classifier, similar to the one discussed in Lect. 1, with two layers of TLU-type neurons computing sgn(.). It should classify the interiors of two squares, the 1st with vertices at (0,0), (0,2), (2,0), (2,2) and the 2nd with vertices at (2,2), (4,2), (2,4), (4,4), as class 1, labeled 1 (sgn(.) = 1), and their exterior as class 2, labeled -1 (sgn(.) = -1).

a) Draw the dividing lines and provide their directions.

b) Reduce the number of neurons in the 1st layer; use the fixed input of -1 in both layers whenever needed.

c) After your design is done, test it on a few random points: (1,1), (3,1), (1,3), (3,3), (5,5) and (-1,-1). Tabulate the test results to check that you got it right (this answer will be graded as well).
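One possible design can be sketched in Python as follows; it is not the graded answer. It assumes the 8 square edges collapse to 6 first-layer TLUs because both squares share the lines x1 = 2 and x2 = 2 (part b), feeds every TLU the fixed input -1, and tabulates the part c points. It also assumes an extra OR unit after the two square detectors: near the shared corner (2, 2) the desired labels are unchanged by reflection through that point, while each first-layer TLU either stays constant there or flips its output under that reflection, so a single output TLU cannot reproduce the labels. The names `LAYER1`, `SQUARE1`, `SQUARE2`, `OR_UNIT`, `tlu` and `classify` are hypothetical, and sgn(0) = +1 is an assumed convention (none of the test points lies on a line).

```python
# Hypothetical design for the two-square task (a sketch, not the official answer).
# Every TLU computes sgn(w . y), where y ends with the fixed input -1.


def sgn(v: float) -> int:
    # Bipolar sign; sgn(0) = +1 is an assumed convention.
    return 1 if v >= 0 else -1


def tlu(w, y):
    # Threshold logic unit: sign of the weighted sum of its inputs.
    return sgn(sum(wi * yi for wi, yi in zip(w, y)))


# Layer 1: six dividing lines (8 square edges minus the shared x1 = 2 and x2 = 2).
# Each row acts on [x1, x2, -1]; the comment names the side where the TLU outputs +1.
LAYER1 = [
    [1, 0, 0],    # u1: x1 > 0
    [1, 0, 2],    # u2: x1 > 2
    [-1, 0, -4],  # u3: x1 < 4
    [0, 1, 0],    # u4: x2 > 0
    [0, 1, 2],    # u5: x2 > 2
    [0, -1, -4],  # u6: x2 < 4
]

# Layer 2: one AND unit per square, acting on [u1, ..., u6, -1].
SQUARE1 = [1, -1, 0, 1, -1, 0, 3.5]  # +1 for interior points of square 1
SQUARE2 = [0, 1, 1, 0, 1, 1, 3.5]    # +1 for interior points of square 2

# Assumed extra output unit: OR of the two square detectors, acting on [s1, s2, -1].
OR_UNIT = [1, 1, -1.5]


def classify(x1, x2):
    u = [tlu(w, [x1, x2, -1]) for w in LAYER1]
    s = [tlu(w, u + [-1]) for w in (SQUARE1, SQUARE2)]
    return tlu(OR_UNIT, s + [-1])


if __name__ == "__main__":
    print(" x1  x2  class")
    for x1, x2 in [(1, 1), (3, 1), (1, 3), (3, 3), (5, 5), (-1, -1)]:
        print(f"{x1:3} {x2:3} {classify(x1, x2):6}")
```

Running it prints the part c table; the expected classes are:

| x1 | x2 | class | region |
|---|---|---|---|
| 1 | 1 | 1 | inside square 1 |
| 3 | 1 | -1 | exterior |
| 1 | 3 | -1 | exterior |
| 3 | 3 | 1 | inside square 2 |
| 5 | 5 | -1 | exterior |
| -1 | -1 | -1 | exterior |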

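Example 2.1 (Lect. 1), transcribed from the images above:

[Figure: TLU #1 to TLU #4 form Layer 1 and TLU #5 forms Layer 2; labels mark the input and output of each layer.]

Layer 1 acts on $\mathbf{x} = [x_1\ \ x_2\ \ -1]^T$ with weights

$$W_1 = \begin{bmatrix} 1 & 0 & 1 \\ -1 & 0 & -2 \\ 0 & 1 & 0 \\ 0 & -1 & -3 \end{bmatrix}$$

so the first layer computes

$$\mathbf{o} = [\operatorname{sgn}(x_1 - 1)\ \ \operatorname{sgn}(-x_1 + 2)\ \ \operatorname{sgn}(x_2)\ \ \operatorname{sgn}(-x_2 + 3)]^T$$

Layer 2: $W_2 = [1\ \ 1\ \ 1\ \ 1\ \ 3.5]$, $\mathbf{x} = [o_1\ \ o_2\ \ o_3\ \ o_4\ \ -1]^T$, $\mathbf{o} = [o_5]$.

- Each TLU in Layer 1 defines a line in the input space.
- Arrows point to the side of the input space where each first-layer TLU (numbered in a circle) responds with +1.
- Since $o_5 = \operatorname{sgn}(o_1 + o_2 + o_3 + o_4 - 3.5)$ is +1 only when all four first-layer outputs are +1, $o_5 = 1$ in the shaded area ($1 < x_1 < 2$, $0 < x_2 < 3$) and $o_5 = -1$ elsewhere.

We analyzed a planar pattern recognizer that responds +1 to all points in the shaded area.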
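To check the example numerically, here is a minimal Python sketch of the same network using the $W_1$ and $W_2$ values above. The helper names `sgn`, `tlu` and `example_2_1` are hypothetical, and treating sgn(0) as +1 is an assumption, since the example does not say what a TLU outputs exactly on a line.

```python
# Sketch of the Lect. 1, Example 2.1 network: four TLUs in layer 1, one in layer 2.
# Every TLU computes sgn(w . y) on an input y that ends with the fixed value -1.


def sgn(v: float) -> int:
    # Bipolar TLU activation; sgn(0) = +1 is an assumed convention.
    return 1 if v >= 0 else -1


def tlu(w, y):
    # Sign of the weighted sum of the inputs.
    return sgn(sum(wi * yi for wi, yi in zip(w, y)))


W1 = [
    [1, 0, 1],    # TLU #1: sgn(x1 - 1)
    [-1, 0, -2],  # TLU #2: sgn(-x1 + 2)
    [0, 1, 0],    # TLU #3: sgn(x2)
    [0, -1, -3],  # TLU #4: sgn(-x2 + 3)
]
W2 = [1, 1, 1, 1, 3.5]  # TLU #5: +1 only if all four layer-1 outputs are +1


def example_2_1(x1, x2):
    o = [tlu(w, [x1, x2, -1]) for w in W1]  # layer 1 outputs o1..o4
    return tlu(W2, o + [-1])                # layer 2 output o5
```

For instance, `example_2_1(1.5, 1.5)` returns 1 (inside the shaded rectangle), while `example_2_1(0.5, 1.0)` returns -1 because TLU #1 responds with -1 there.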
